I'm an AI researcher, but my AI company doesn't use the word AI

My Credentials for AI

I've been working on some form of machine learning since 2010, when I started my PhD in surgical robotics. Back then, neural networks were a footnote in machine learning textbooks. When working on my master's thesis, I remember looking at neural networks and thinking, "Why would anyone ever use this technique if it's so convoluted for a simple problem?"

I feel self-conscious because I dropped out of my PhD program in 2012. I wanted to go to Silicon Valley and work as a programmer, but no one would hire me because no one cared about AI back then. I didn't know how to code an app, and that's what companies were hiring for.

Neural networks really took off around 2012/2013 with AlexNet, but I was late to the game because I was learning to code websites. I ended up founding my first startup in 2016 as an e-learning app, and it wasn't until 2019 that I got back into neural networks, when we built our AI upscaling and then our virtual background technology for Vectorly.

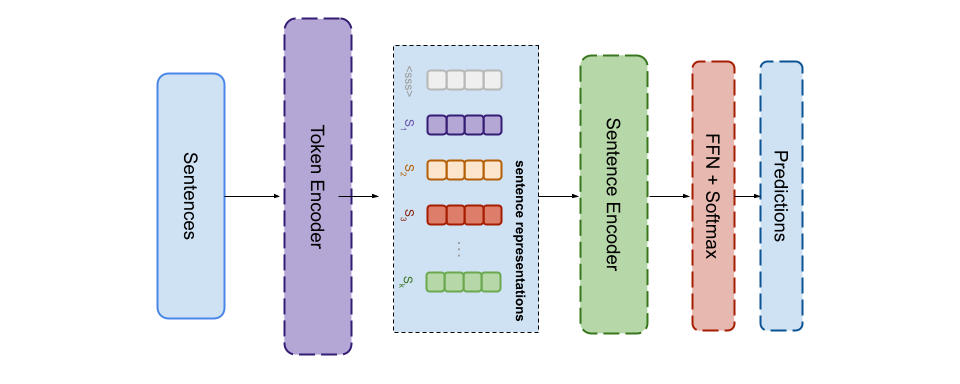

I eventually sold that company and worked for the acquiring company (Hopin/Streamyard) until 2024. During that time, as ChatGPT came out, I moved mountains to upskill myself, understand how the modern flavor of transformer models worked, and hand-code my own Transformer-based chatbots and translators from basic PyTorch operations.

I was never at the forefront of the shiniest demos on TechCrunch, but I did learn the basic operating principles of AI, such as:

- Datasets are more important than models
- Benchmarks are more important than architecture
- Focus on the research problem at hand

You could call me pedantic and shrug that no one cares. But when building Streamyard's AI clipping tool, I realized you could build an AI clipping algorithm that is 100x faster, 100x cheaper, and much better than tools like Opus Clips. The people I talked to at Hugging Face about my idea thought I was a bit crazy and said this would constitute experimental AI research. They weren't wrong, but I don't think they actually expected me to do the research.

Well, I did the research, and it works: I built a 10x better clipping algorithm. I never got a chance to build it into Streamyard, so I built it into Katana instead. At the same time, I spent the past several months developing a system that can accurately track faces and who is talking in a video (see demo below).

If all you read is tech news, you might shrug: who cares about this? Face tracking existed 10 years ago. But if you actually delve into the world of video podcasting, you'll find multiple companies, like Riverside and Streamyard, built specifically for recording the kinds of video podcasts you see on YouTube.

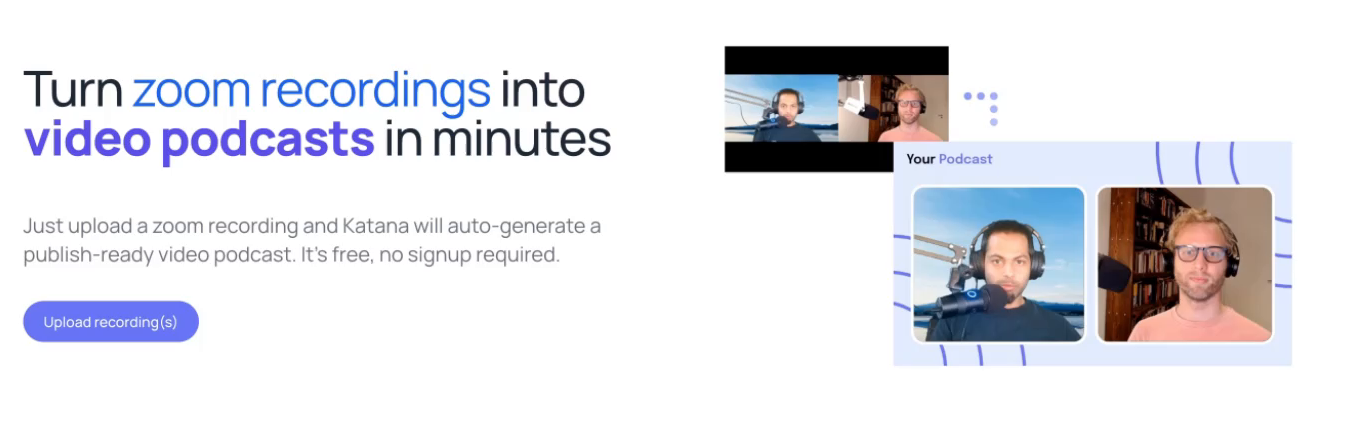

From talking to dozens of podcasters, I've found that most of them use tools like Zoom to record video interviews, so the pitch for Streamyard and Riverside is to convince people that their tools provide better recording quality and editing features that you don't get with Zoom.

What if you could turn a Zoom recording into a good looking video podcast, with the same or better audio/video quality (remember my years working on AI upscaling?) for like 5% of the effort of learning a new tool like Riverside (and also like, for free)?

So I could have built a simple AI video-editing tool like Veed, Gling, or Diffusion Studio, wrapped a nice web UI around open-source models trained by other people, and added marketing slogans like "AI this, AI that".

But by looking at fundamentals, I realized you can get people what they want (a good-looking video podcast) in an entirely different way (making a Zoom recording look good), and invalidate the core thesis of well-funded, venture-backed companies like Riverside in the process. That's powerful, and that's the kind of fundamentals-driven thinking I like to focus on.

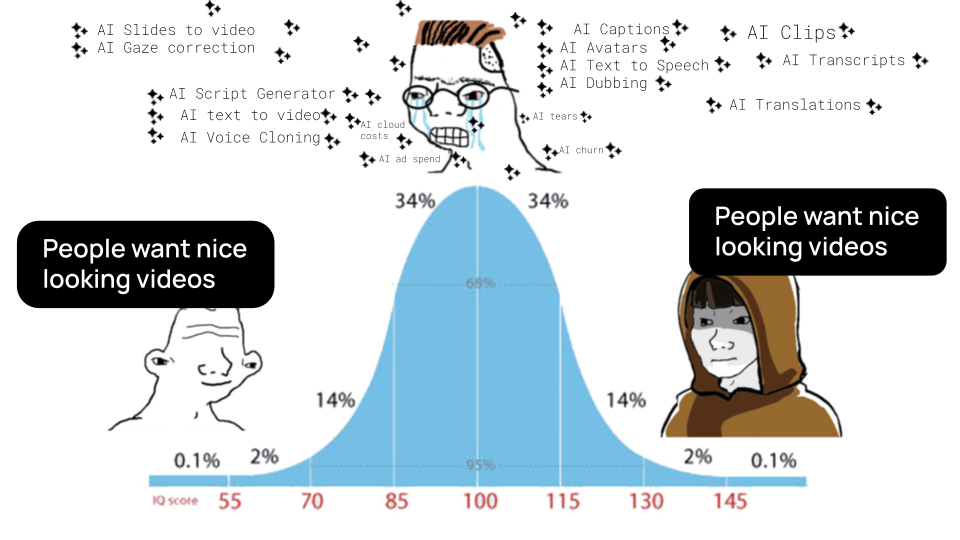

What people actually want

I started Katana around the idea of automating video editing. I specifically focused on video podcasts because that's what I started looking at when working at Streamyard and, as a listener, I like podcasts.

I launched the MVP of Katana in January 2025, and the pitch was to auto-edit a Zoom recording into a video podcast.

I then went to a podcasting conference and just listened to people talk about their podcasts and what tools they use. For the people I thought Katana might actually help, I did a short demo. What surprised me was not just that there was a tangible aha moment, but that it came not from any AI feature, but simply from changing the default background to a custom image.

In retrospect, thinking from first principles, it seems painfully obvious.

People want nice-looking videos.

Secondarily, you don't want to spend hours just to make it look nice. I mean, intuitively that's what I want as a podcaster, and Descript drives me nuts with how difficult it makes managing visuals, but the simple act of recognizing that other people might feel the same way was a perspective shift for me.

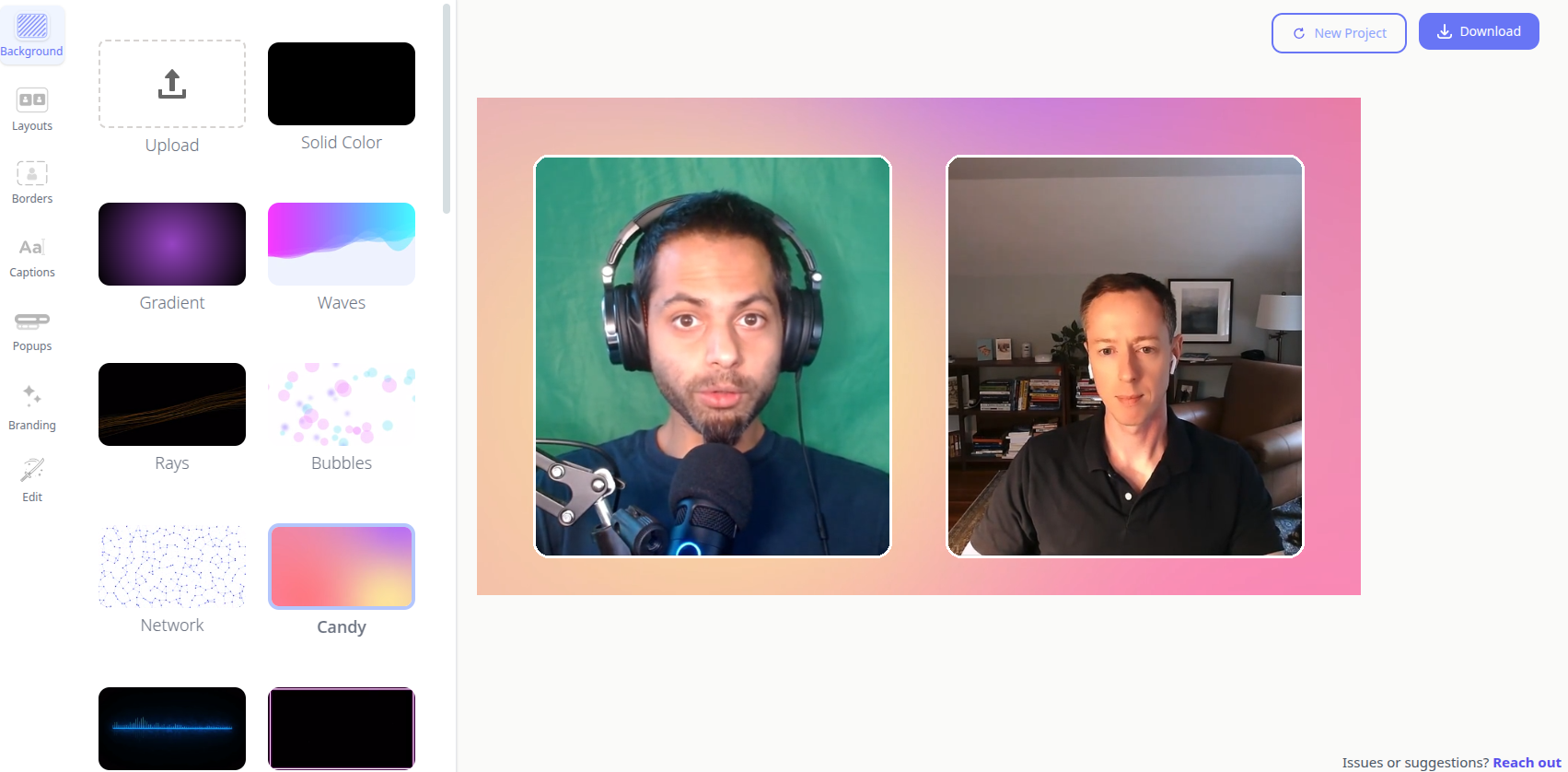

Based on that, I spent the last two weeks shifting the focus of Katana in a more design-heavy direction, with an emphasis on branding, visuals, and layouts (see the demo below).

I didn't really change any of the core features; I just added some extra backgrounds and borders. In retrospect, though, "Making your Zoom recording look good" seems like a much more compelling pitch than "Auto-edit a recording".

When I asked which tools people liked, they mentioned tools like Canva. If you buy my analogy that editing video is similar to writing software, and my model is Squarespace (not a coding tool, but a design tool that outputs websites), then it makes sense to build a design tool that outputs video podcasts.

My gut feeling is that I could skimp on recording quality and editing features, but if I could make it ridiculously easy to make a Zoom recording look like the professional video podcasts you see on YouTube, I'd have something far more compelling, for far more people, than Descript or Riverside. This also makes sense strategically: you compete with established companies not by trying to be like them, but by building something different that still gets people what they want in a different (and more compelling) way.

What about the AI?

Of course there's AI in Katana. There are features that could have been papers, but I don't think people care about the details, just as I don't think they care about my app architecture or which analytics tool I use.

I also still think it's worth building Katana as a tool to automate video editing, and that is still very much the goal. You might point to all the other companies out there and ask: what makes Katana different from all the other video editing products?

Let me illustrate with concrete examples. If these companies knew what they were doing, Descript would have better filler word removal, and Opus Clips would have a better clipping algorithm.

If these companies haven't figured out the easy stuff that aligns with their core competencies, I don't think they're going to figure out the hard stuff that doesn't.

I have high conviction that "turning Zoom recordings into video podcasts" is useful, so I've built a tool that does that. I know clips and filler word removal are useful, so I'll have better clips than Opus and better filler word removal than Descript. I'm confident that if all I did was that, I'd have a compelling product and could support myself and a small team.

That would put Katana in a place to start tackling the hard stuff, like getting AI to create edits that are consistently as good as those of a trained professional editor. I'm excited to do hard stuff because it's hard, and I'll be excited to experiment with AI research and push the state of the art.

But at the end of the day, I don't think most users care about the details as long as it just works. That's why I haven't plastered the word AI all over my app, even though I feel I have more of a right than most to claim I run an "AI company".